In my previous landing page A/B test case study, I promised to get back to you with a few A/B testing misconceptions.

In this article, I’ll share three of these.

Also, if you haven’t done so yet, I recommend going through these A/B testing articles, too:

- Statistical Significance in A/B testing (and How People Misinterpret Probability)

- A/B Testing Culture (within your Company)

- Landing Page A/B Test (Case Study)

A/B testing misconception #1

Misconception:

“It’s a good rule of thumb to run your A/B tests for one or two weeks.”

Or another similar one:

“You should have a ~1,000 user sample size for each variation of your A/B test to make sure your results are real.”

Truth:

These are the most dangerous A/B testing misconceptions out there. I read them on blogs, hear them at conferences, and see them in YouTube videos from time to time. And they are just plain wrong!

The fact is that there are no good rules of thumb about how many users you need for your A/B test. Every time, you’ll have to run your numbers for yourself. There are different calculation methods, but for the simplest one, you will need these three numbers:

- your current conversion rate

- the minimum conversion increase you want to be able to detect

- the minimum statistical significance level you choose (I recommend at least 95%, but I usually use 99%)

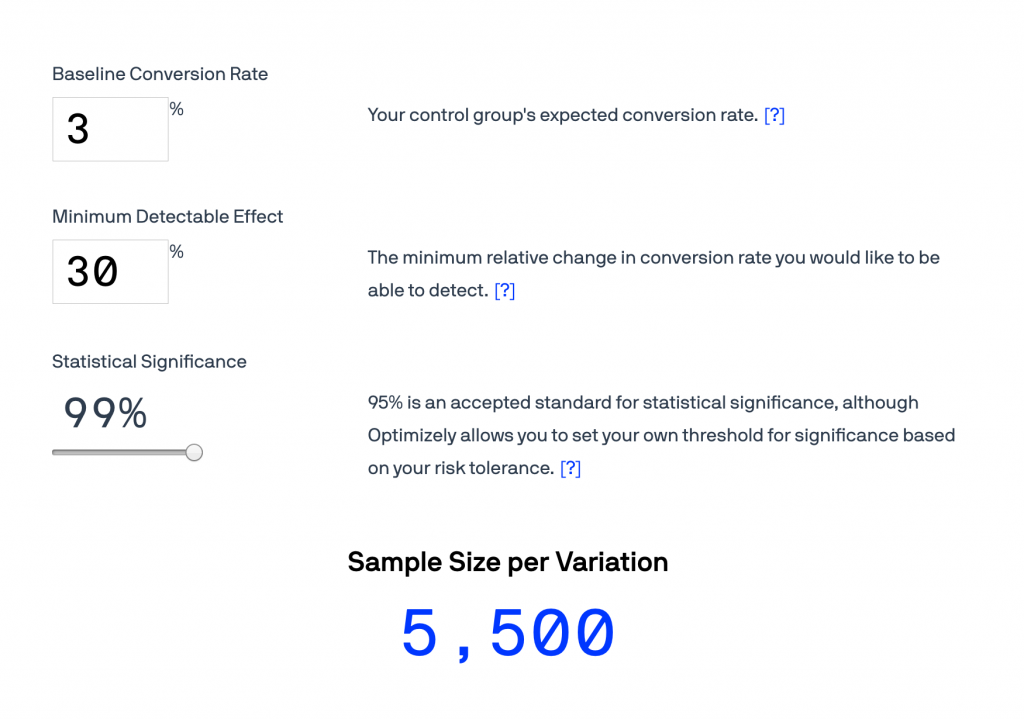

Don’t worry: once you have these numbers, the rest is easy. For the actual math, there are many online calculators available. Take Optimizely’s sample size calculator, for example: you put your numbers into it, and you get the sample size you’ll need for your experiment. Super simple.

Here’s an example:

- current conversion rate: 3%

- minimum detectable conversion increase: 30% (relative, i.e. from 3% to 3.9%)

- chosen statistical significance level: 99%

And there you go:

With these parameters, you’d need 5,500 users per variation.

Okay, so what does this mean?

It means that if you have two variations (the original “A” and the new “B”), you will need:

5,500 × 2 = 11,000 users.

And if your website’s traffic is, let’s say, 25,000 users a month, the test should run for at least:

11,000 / 25,000 = 0.44 months

That’s roughly two weeks (about 13 days).

Of course, this is still an estimate, and it has a few moving parts (e.g. the expected conversion increase in the formula). But at least this estimate is backed by mathematics and hard facts.
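For a look at the kind of math such calculators run, here is a minimal sketch of one common textbook method: the fixed-horizon sample size formula for comparing two conversion rates with a two-sided z-test. It is not necessarily the method Optimizely’s calculator uses, and it needs one input the list above doesn’t mention: statistical power, assumed here to be 80%. It also treats the 30% as a relative lift (3% → 3.9%). The function name `sample_size_per_variation` is hypothetical, and `statistics.NormalDist` requires Python 3.8+. Because the method and assumptions differ, this sketch does not reproduce the 5,500 figure; with the example’s inputs it lands at roughly 9,600 users per variation, which is one more reason to know which method your tool uses.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_lift, significance=0.99, power=0.80):
    """Users needed in EACH variation to detect `relative_lift` over `baseline_rate`.

    Textbook fixed-horizon formula for a two-sided, two-proportion z-test.
    `power` is an assumption added for this sketch (80% is a common default).
    """
    p1 = baseline_rate                        # conversion rate of the original (A)
    p2 = baseline_rate * (1 + relative_lift)  # conversion rate we want to detect in B
    z_alpha = NormalDist().inv_cdf(1 - (1 - significance) / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)                        # quantile for the desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)                       # round up: you can't recruit a fraction of a user


n = sample_size_per_variation(baseline_rate=0.03, relative_lift=0.30, significance=0.99)
print(f"{n:,} users per variation")
```

Note how sensitive the result is to the minimum detectable increase: the difference between the two rates is squared in the denominator, so halving the lift you want to detect roughly quadruples the required sample.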

Thus it’s much, much better than a nonsensical rule of thumb about testing with ~1,000 users or for 1 week.

A/B testing misconception #2

Misconception:

“Read these A/B testing case studies and gather some ideas for your next experiment!”

Truth:

You should never collect A/B testing ideas from conferences or from case studies you read online.

Why? Two reasons:

- Survivorship bias.

For example, using red buttons everywhere instead of grey ones might sound like a great idea, at least based on all the A/B testing case studies you can find out there. But the only people who write case studies about their red-button tests are the ones who were successful with them. Nobody writes an article about a neutral red-button test or one with a negative outcome, even though there might be many more of those cases.
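A quick simulation can make the survivorship effect concrete. This is a hypothetical setup, not real data: 10,000 companies each run a red-vs-grey button test where the true effect is zero (so every observed lift is pure noise), and only the companies that happen to see a lift above +10% write a case study. The noise level, the publishing threshold, and the function name `simulate_case_studies` are all made up for illustration.

```python
import random
from statistics import mean


def simulate_case_studies(n_tests=10_000, noise_sd=0.05, publish_threshold=0.10, seed=42):
    """Return (all observed lifts, lifts that got written up as case studies)."""
    rng = random.Random(seed)
    # The red button truly does nothing: each observed relative lift is random noise.
    observed = [rng.gauss(0.0, noise_sd) for _ in range(n_tests)]
    # Only the lucky "winners" get published.
    published = [lift for lift in observed if lift > publish_threshold]
    return observed, published


observed, published = simulate_case_studies()
print(f"all tests:    {len(observed):>6,}  average lift {mean(observed):+.1%}")
print(f"case studies: {len(published):>6,}  average lift {mean(published):+.1%}")
```

Every published case study reports a healthy win, while the average lift across all tests is essentially zero. Reading only the case studies, you would conclude that red buttons work.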

This is what survivorship bias tells us: if you read a case study or a best practice, you can never tell what the idea’s real overall success rate is.

- But the second reason is even more crucial for you:

You should not collect A/B test ideas from case studies because, even if those ideas worked out well for the company that wrote about them, their audience is not the same as yours. Their product is not the same as yours. Their pricing is not the same as yours. And their unique value proposition is not the same as yours.

The same solution to a problem might lead to great success in one business — and might lead to a disaster in another one.

So your best shot is not to copy others, but to do your own initial research before setting up an experiment. A few methods you can use:

- talking to your (potential) users and customers (and listening to what they say)

- running UX tests

- running data analyses in Google Analytics and/or in your own (SQL) database

- using five second tests

- using fake door tests

- …

Of course, there are many more research methods — but you get the point.

By running an initial research round, you’ll get feedback directly about your product from your own audience.

And there’s no better resource for fruitful A/B test ideas.


A/B testing misconception #3

Misconception:

“In an A/B test, you can only change one thing at a time.”

Truth:

You can change more than one thing.

Did you read my landing page A/B test case study in my previous article?

Then you know that not so long ago I ran an experiment where I tested a landing page. And I didn’t change only one picture or one headline. I changed the whole page.

As you can see, I changed more than one thing.

Still, I pulled off a +96% conversion increase. (Yes, I nearly doubled my conversion rate!)

I have to admit something though.

Changing more than one thing is risky.

Why? Because whether your conversion rate goes up, goes down, or stays the same, you won’t know the contribution of the individual elements to that change. Maybe your new images increased the number of registrations, but the new wording decreased it. Or maybe it’s the other way around. You won’t know, because you’ll only see the total effect.
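A small numeric illustration of why the total effect hides the parts. The numbers are made up: two hypothetical changes, one worth +20% and the other -10% (relative), assumed to compound multiplicatively. Whichever change is the real winner, the test reports the same combined conversion rate.

```python
import math

baseline = 0.03  # hypothetical baseline conversion rate

# Scenario 1: new images +20%, new wording -10% (relative lifts)
scenario_1 = baseline * (1 + 0.20) * (1 - 0.10)
# Scenario 2: the other way around: new images -10%, new wording +20%
scenario_2 = baseline * (1 - 0.10) * (1 + 0.20)

# The A/B test only observes the combined conversion rate of variation B
print(f"scenario 1: {scenario_1:.2%}, scenario 2: {scenario_2:.2%}")
```

Both scenarios give variation B a 3.24% conversion rate, so the experiment alone can’t tell you which change helped and which one hurt.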

That said, I think you should take that risk, especially if you are running a small or medium-sized business.

For huge players (Google, Facebook, Amazon, etc.), everything is different, including how they run experiments. Let’s say Amazon changes one tiny thing on their website: they move a button up by 30 pixels. And let’s say it increases their conversion rate by 0.1% (relative). Since they are a huge business:

- They have so many users that they will still have enough data points to evaluate that 0.1% conversion increase as statistically significant.

- At their revenue size, the absolute value of the small 0.1% relative change can still be huge. (E.g. it can cover the salary of 100 or 1,000 new employees.)

But smaller businesses are in a different position. For them, the absolute value of a relative 0.1% change is hardly noticeable.

And, very importantly, smaller businesses have a smaller audience. A change as small as moving a button by 30 pixels produces an effect so tiny that they could never collect a big enough sample to consider the results statistically significant. (Meaning: you can’t even run an experiment like that.)
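To put rough numbers on this, here is the textbook fixed-horizon sample size formula (two-sided z-test, with an assumed 80% power) applied to a 0.1% relative lift. The 3% baseline is borrowed from the example in misconception #1 purely for illustration (Amazon’s real conversion rate isn’t given here), the 25,000 monthly users are that example’s traffic, and the significance level is a lenient 95%. The function name is hypothetical; this is a sketch, not how any specific company sizes its tests.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_lift, significance=0.95, power=0.80):
    """Users per variation for a two-sided, two-proportion z-test (fixed horizon)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - significance) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# A 0.1% relative lift on a hypothetical 3% baseline (3% -> 3.003%)
n = sample_size_per_variation(baseline_rate=0.03, relative_lift=0.001)
total_users = 2 * n  # original + one variation

monthly_users = 25_000  # the small site from misconception #1
years_needed = total_users / monthly_users / 12

print(f"{n:,} users per variation")
print(f"at {monthly_users:,} users/month: about {years_needed:,.0f} years")
```

The result runs to hundreds of millions of users per variation: a sample only players with enormous traffic can realistically collect, and thousands of years of traffic for the small site.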

The point is: yes, big players (with hundreds of millions of users) should change one thing at a time in their experiments.

But smaller businesses have to take greater risks and aim for greater results.

(Note: the “risk” we are talking about here is not a huge risk, though. With a losing A/B test, you can lose time, sure. But your long-term conversion rates and revenue are protected by the experiment itself. Plus, you can significantly lower the risk by running initial research; see the previous section.)

And one more thing about this whole change-one-thing-at-a-time myth. (Although this might lead to philosophical depths.) What counts as “one thing” anyway?

- Is it one picture? One color? One headline?

- Or one pixel?

- Or one block of your webpage?

- Or a whole section?

- Or a whole landing page?

- Or the whole website?

You decide…

But I recommend following this concept: big businesses should A/B test individual elements (e.g. pixels, button texts, button placements), while small and medium-sized businesses should A/B test concepts (e.g. a whole landing page, site-wide messaging, site-wide design).

Conclusion

Okay, these were the three A/B testing misconceptions that I wanted to show you. Have you seen other A/B testing myths and misconceptions? Let me know: drop me an email!

- If you want to learn more about how to become a data scientist, take my 50-minute video course: How to Become a Data Scientist. (It’s free!)

- Also check out my 6-week online course: The Junior Data Scientist’s First Month.

Cheers,

Tomi Mester
