Many companies use A/B testing, but few of them use it successfully. Sure, everyone has a few winning A/B tests to be proud of and to tell stories about at conferences. But only a few online businesses have achieved real, long-term success via continuous and strategic experimentation. Why is that? There are multiple reasons, but an important one is the lack of an A/B testing culture within the company.

A/B testing culture is a two-way switch: either you have it or you don’t

I’ve worked with many online businesses over the years. Here’s the problem I encountered the most:

- We ran a successful A/B test. (Which by the way was often the result of months-long initial research and tests.)

- We increased the conversion rate by 10-20-30%.

- We released the winning variation.

- And then in the following months, I had to fight continuously to keep that winning version alive — and not let it be replaced with random new ideas without A/B testing.

Oh, boy. It wasn’t funny. We spent months optimizing a website. We delivered results. We had numbers, hard facts(!), that showed that it was good for the business. Yet some people in management just ignored these facts and changed the validated design to a new one that was based on instincts, gut feelings or best practices. And of course, they didn’t bother to test it at all. They just wiped out our months of work, along with the conversion increase we had achieved.

Lose-lose.

And this wasn’t a one-off case. Talking to other data professionals, I know that this happens everywhere from time to time. (Here’s a recent article about a KissMetrics case.)

Over the years, I had to realize that it’s not enough to build a great A/B testing framework and to run winning A/B tests. As a data scientist, you have to think of:

- how your results will stick,

- how your colleagues will become more scientific when releasing new designs or features,

- and eventually: how your organization will become a real experiment-driven organization.

Because A/B testing works only two ways: either everybody at the company is up for it or no one should do it… Either your business has an A/B testing culture, or – in the long-term – your experimentation program will inevitably fail.

In this article, I’ll show you a few key ideas that will help you to win this war.

Evangelize A/B Testing Culture!

Not everybody is a data scientist. You will have colleagues who don’t know why A/B testing is important, or even what it is at all. And that’s okay. As a data professional, a part of your job is to evangelize data-driven methods at your company.

In the beginning, you’ll have to hold a lot of presentations, workshops and one-on-one sessions with stakeholders. You have to explain to your co-workers how A/B testing works and why it’s important. Show them case studies — examples where an experiment led to great success and examples where not having an experiment led to failure. Give them demos and let them understand how the different tools work in real life!

I have done this many times and believe me: when people start to see the point of experimenting, they quickly turn and fall in love with the idea.

Sooner or later, your colleagues will start to admire the knowledge they get out of running A/B tests — and they will get more excited about being more scientific with the things they do. If you have done a good job at this phase, A/B testing will become a part of your company culture.

You think you’ve won the war?

Not at all. That’s only the first step.

And actually, that’s where the hard part begins.

Note: One more thing. It’s much easier to evangelize A/B testing culture at your company when it’s small, and then to continue experimenting as it grows. Turning a big company (500+ employees, for instance) is not impossible, but it’s more difficult, for sure. That’s why I recommend that every business start thinking about data-driven methods early on (at a company size of ~10-20 employees).

When everybody wants to run experiments

When your co-workers fall in love with the concept, they will want to run their own experiments. They will be able to do so, too, since many online marketing tools provide easy-to-use A/B testing features. And this is a major risk for your business. Just because everybody can run a test doesn’t mean that everybody can do it properly, or that the results will be scientifically correct, applicable or useful.

“It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so.”

Mark Twain

A/B testing has many pitfalls and I see less experienced people run into the common mistakes all the time, without even realizing it. They get fake results. But since they think that these are the outcomes of a proper experiment, they publish their fake winners. And then they wonder why the conversion rate drops.

If you have people at your organization who are open to the experiment-driven approach: that’s fantastic. But you have to help them run their tests properly.

In my experience, there are three game-changing practices to fix this issue:

- Providing education and framework

- Setting up a strict A/B testing policy

- Ownership

Let me go through these one by one.

Education: Teaching and mentoring your colleagues

Again, not everybody is a data scientist. But everybody who gets involved with an A/B testing project will have to understand the basic concepts like:

- how to set a hypothesis

- how to estimate the business impact and the importance of a test

- how to run initial research

- how to run an A/B test without messing up your whole website

- how to draw conclusions from a test (e.g. the importance of statistical significance)

- and many more things…
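To make the point about statistical significance a bit more concrete, here is a minimal sketch of one common way to check it: a two-proportion z-test on the conversion rates of the two variations. This is my illustration, not a prescribed method; the function name and the visitor and conversion numbers are made up, and a real evaluation should also respect the sample size and test duration that were planned in advance.

```python
from math import sqrt, erf


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / conv_b: number of conversions in variation A / B
    n_a / n_b:       number of visitors in variation A / B
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis "A and B convert equally"
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Standard normal CDF expressed with the error function
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return z, p_value


# Hypothetical numbers: 10,000 visitors per variation
z, p_value = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

A small p-value (commonly below 0.05) suggests that the difference is unlikely to be pure chance. It does not tell you that the test was set up or run properly, which is exactly why the education part matters.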

Your non-data-scientist colleagues want to run experiments. That’s fine. In fact, that’s great! But they can’t make mistakes that risk the business itself. So as a data professional, it’s your responsibility to educate them, show them the typical mistakes, give them best practices and help them learn the necessary steps of a well-designed and well-executed A/B test. Of course, you won’t have to raise the next generation of data scientists but you’ll have to show your co-workers how they can be more scientific in their day-to-day job.

A Strict A/B Testing Policy

I learned this the hard way: a strict A/B testing policy is key to avoiding disasters.

You need a clear framework that everybody at the company can and has to use. It’s best if it’s a combination of easy-to-use checklists and step-by-step processes/guides.

For example, a few years ago, I worked with a startup that we successfully turned into an experiment-driven one. It was a 200-employee organization at the time, and many people were running A/B tests, sometimes even in parallel. But before anyone could actually set up and start a test, she had to fill in a form. Something like:

- I have this insight: ________

- It’s supported by qualitative research: ___________

- And it’s also supported by these analyses: ___________

- My hypothesis is: ____________

- So I’ll A/B test this: ____________

- My primary metric that I’ll use to evaluate my experiment is: ____________

- I’m aiming for ____________ % increase.

- I want to run my test on this segment: ____________

- The size of the audience is: ____________

- So my test will run for ____________ weeks.

- …

Of course, this is just a simplified version and only one step of the whole process. But the point is that everyone who ran an A/B test at this company had to fill in this form first. The next step was to send it over to a data scientist who would either approve it or give feedback about what was wrong with it. This way, everybody was allowed to run experiments — but all tests were reviewed by someone who had reliable experience in the topic.
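The last few fields of the form (the target increase, the audience size and the number of weeks) are connected, and a reviewer can sanity-check them with a quick calculation. Below is a rough sketch using the standard normal-approximation formula for comparing two proportions. The function names, the default significance level (5%) and power (80%), and all example numbers are my assumptions for illustration, not the form's actual rules.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variation to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def weeks_needed(n_per_variant, weekly_visitors, variants=2):
    """Whole weeks required if the segment's weekly traffic is split evenly."""
    return ceil(n_per_variant * variants / weekly_visitors)


# Hypothetical form entries: 5% baseline conversion, aiming for a 10% increase,
# on a segment that gets 20,000 visitors per week
n = sample_size_per_variant(baseline_rate=0.05, relative_lift=0.10)
print(n, "visitors per variation")
print(weeks_needed(n, weekly_visitors=20_000), "weeks")
```

If the number of weeks that comes out is unrealistic for the chosen segment, that is useful feedback for the person who filled in the form: aim for a bigger change, pick a larger audience, or drop the idea.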

And of course we set up similar guides and checklists for the rest of the process. (Implementing the test, running the test, stopping the test, evaluating the results, implementing the changes, etc.)

By providing a clear, bullet-proof framework like this one, you can significantly reduce the risk of something going terribly wrong.

One more thing to keep in mind. Your framework is only as useful as you make it. So if someone proposes a bad A/B test, it’s the data scientist’s responsibility to turn that idea down. In fact, most A/B test ideas will be turned down. It can happen for multiple reasons, just a few of which are:

- you’ve already run this test before,

- the test interferes with another project,

- it’s unimportant or has no real business impact,

- it’s not backed by enough data and research,

- etc.

Turning someone’s idea down sounds merciless… but remember, the framework is there to protect the business. And by saying ‘no’ to something useless, you free up resources to say ‘yes’ to something useful.

Ownership: Someone should oversee all experiments at the company

Larger businesses run tens or even hundreds of A/B tests in parallel. The more experiments run next to each other, the higher the chance that they will interfere with each other and something will go wrong.

I’ve seen some very nasty things… an extreme example would be: the same landing page tested by two different teams at the same time. Of course, it led to a disaster.

Written down, this sounds obvious – but in real life it happens. So you’ll have to prepare for this and make sure that you avoid it.

The best solution is to appoint an owner of your whole A/B testing program. This person (or, at larger companies, even a team of 3-5 people) oversees every running, completed and planned experiment. She knows:

- which team runs

- which A/B tests

- on which part(s) of the website

- for which segment(s) of the audience

- …

In one word: everything.
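If the owner keeps this overview in a structured form, spotting collisions like the landing page example becomes a simple check. Here is a minimal sketch, assuming each experiment is registered with its team, the pages it touches and the segments it targets. The `Experiment` class, the `find_conflicts` function and the sample experiments are all hypothetical, invented for this illustration.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Experiment:
    name: str
    team: str
    pages: frozenset     # parts of the website the test changes
    segments: frozenset  # audience segments the test targets


def find_conflicts(experiments):
    """Return pairs of experiments that share at least one page AND one segment."""
    conflicts = []
    for a, b in combinations(experiments, 2):
        if a.pages & b.pages and a.segments & b.segments:
            conflicts.append((a.name, b.name))
    return conflicts


# Hypothetical registry of running experiments
running = [
    Experiment("new_hero_image", "growth", frozenset({"/landing"}), frozenset({"mobile"})),
    Experiment("shorter_signup_form", "product", frozenset({"/landing", "/signup"}), frozenset({"mobile", "desktop"})),
    Experiment("pricing_table_order", "sales", frozenset({"/pricing"}), frozenset({"desktop"})),
]

for first, second in find_conflicts(running):
    print(f"Possible interference: {first} vs. {second}")
```

A check like this only flags obvious overlaps. Whether two tests really interfere is still a judgment call for the owner.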

Preferably, this is the same person who previously evangelized A/B testing culture, educated her colleagues and formed the company’s A/B testing policy. So basically, the go-to person for A/B testing.

Ownership means calling the shots (prioritization, which test can run, which can’t, etc.) and taking the responsibility, too. So if a test is improperly implemented and, say, it breaks the website on mobile devices, this person will take the responsibility.

Occasionally, this position can be stressful, sure — but at a larger business, this is a key role for providing a smooth testing environment.

Enhancing the A/B testing culture — Build a Knowledge Base of the takeaways from previous experiments!

Here’s a pro tip — that I’ve seen implemented only by the best businesses so far. Yet, it’s a great tool to ensure the real long-term success of your A/B testing program.

Before I get into it, it’s important to understand one key idea:

A/B testing, primarily, is not a conversion optimization tool. It’s a research tool!

So your number one priority is not to deliver as many winning experiments as you can but to learn from every experiment you run. Even the losing ones.

And that’s why it’s extremely useful to collect the gathered knowledge somewhere.

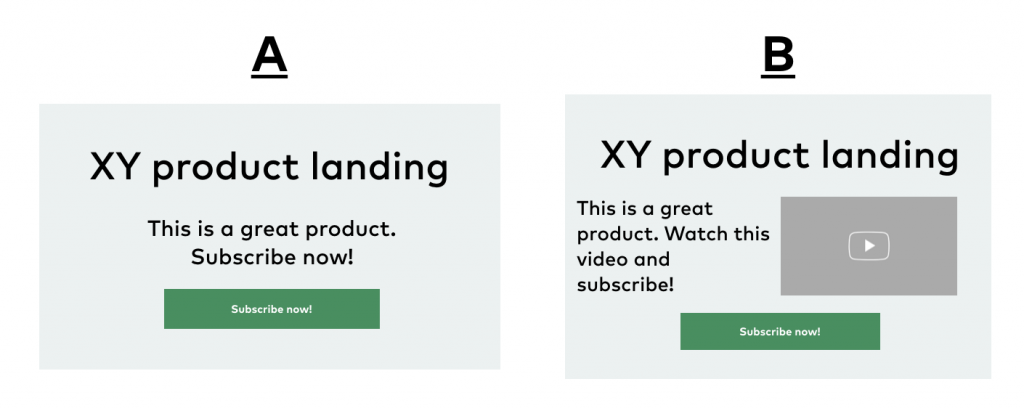

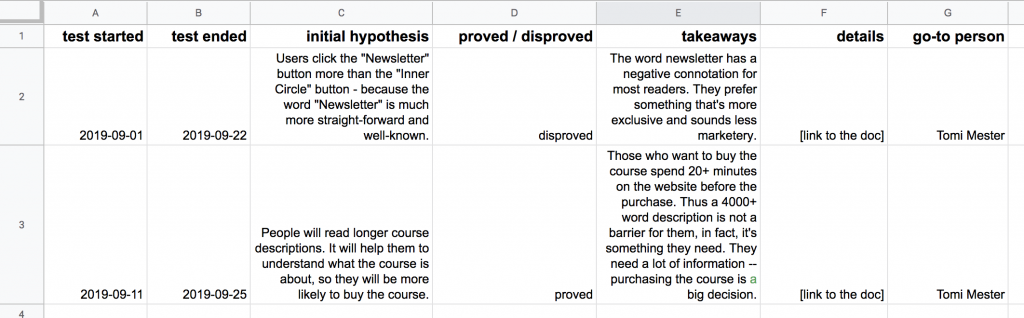

You don’t have to overcomplicate it though. In the beginning, a simple google spreadsheet will do the job. Something like this:

This sample spreadsheet contains:

- the key insights (hypothesis, result, takeaways)

- when the test ran (so one can decide whether the takeaways are still relevant for her or not)

- a link to more detailed documentation, like a one-page summary, of the test (so if someone wants to dig deeper, she can)

You can add more columns, too. (E.g. the description of the different variations, audience size, segments, etc.) But I recommend keeping it simple.
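If you would rather keep the knowledge base as a plain file at first, the same structure fits into a CSV. The sketch below is only an illustration: the column names follow the list above but are my own naming, and the two sample rows (including the link placeholders) are invented.

```python
import csv
import io

# Column names are assumptions that mirror the spreadsheet described above
FIELDS = ["test_name", "ran_on", "hypothesis", "result", "takeaway", "doc_link"]


def to_csv(rows):
    """Serialize the knowledge base rows to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def search(rows, keyword):
    """Find past experiments that mention the keyword, to avoid re-running them."""
    keyword = keyword.lower()
    searchable = ("test_name", "hypothesis", "takeaway")
    return [
        row for row in rows
        if any(keyword in row[field].lower() for field in searchable)
    ]


# Invented sample entries
knowledge_base = [
    {"test_name": "Shorter signup form", "ran_on": "2019-03",
     "hypothesis": "Fewer form fields increase signups",
     "result": "won", "takeaway": "Asking for a phone number scares people away",
     "doc_link": "(link to one-page summary)"},
    {"test_name": "Green vs. red button", "ran_on": "2019-05",
     "hypothesis": "A red button gets more clicks",
     "result": "inconclusive", "takeaway": "Button color alone makes no measurable difference",
     "doc_link": "(link to one-page summary)"},
]

print(to_csv(knowledge_base))
print([row["test_name"] for row in search(knowledge_base, "signup")])
```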

Again, to kickstart your A/B testing knowledge base, creating a spreadsheet is just fine. Later on, you can build your own internal website and give it a nicer and more user-friendly format. But the point is to let your co-workers access the takeaways and the knowledge that is already gathered — so they won’t have to run the same experiments again and again. (Which usually is a complete waste of time, yet it happens so often.)

A knowledge base like this is also great for enhancing the A/B testing culture within the company. Just imagine your non-data-scientist colleagues looking at it for the first time: “So much information, so useful, so easy to access. This experiment-driven approach is kinda cool.”

Note: the same spreadsheet can be used (by adding more tabs) to build a backlog of ideas for upcoming tests and even to prioritize them… but that’s another topic for another article.

Conclusion

Building a strong A/B testing culture and an experiment-driven organization is not an easy job, and it usually doesn’t happen overnight. But it is definitely worth the time and the effort. In this article, I shared a few good ideas to get started. But if you have more tips and best practices, don’t hesitate to share them with me: drop me an email. (I’ll feature the best ideas in this article!)

- If you want to learn more about how to become a data scientist, take my 50-minute video course: How to Become a Data Scientist. (It’s free!)

- Also check out my 6-week online course: The Junior Data Scientist’s First Month video course.

Cheers,

Tomi Mester
