“Where can I get data from?”

That’s a totally relevant question, especially if:

- you’re building a data science hobby or side project,

OR - you want to expand your company’s research project with external data sources,

OR - something along those lines… 🙃

So, in this post, I’ll quickly and concisely gather a few options that can be a good starting point. I’ll expand this list in the future and eventually turn it into a proper library of resources.

Let me show you three popular methods.

There are more, but these are the three most commonly used

- Downloading public datasets

- Web scraping

- APIs

Let’s go through them one by one.

(1) Downloading Public Datasets

There are a few websites where the creators simply gather, upload, and make a large number of datasets searchable. These vary in quality, but with thorough searching, you can find some real gems. The only downside is that these datasets are usually not “live,” meaning they don’t update regularly. So, you can typically only analyse a fixed period from the past. However, this is often enough—especially for hobby projects.

Here’s the list:

- Google Dataset Search:

A search engine, just like Google, but for datasets: https://datasetsearch.research.google.com/ - Kaggle.com:

This site primarily hosts data science competitions, but the related datasets are often available to the public: https://www.kaggle.com/datasets - Awesome Public Datasets:

As the name suggests, a great list of public datasets: https://github.com/awesomedata/awesome-public-datasets - DataHub:

Similar to the previous one, but a bit shorter: https://datahub.io/collections - Data.gov:

Data published by the U.S. government. Great for sociological projects (with a strong U.S. focus, of course): https://www.data.gov/ - NYC Open Data:

Same as the previous one, but specifically focused on New York: https://opendata.cityofnewyork.us/

(2) Web Scraping

Web scraping is essentially the process of gathering data from public websites.

It’s like visiting a webpage and manually collecting the data on it (e.g., collecting how many stars each movie has on IMDB). But that process is repetitive, boring, and time-consuming… So, instead of doing it yourself, one of Python’s web scraping packages does it for you. (I mostly use BeautifulSoup.)

I’ve created a 20-minute Python tutorial that demonstrates how this works.

It’s in English, and we use it to find out who the most popular Marvel superhero is by scraping data from Wikipedia in just a few simple steps:

* Is web scraping legal? Of course, if a website explicitly prohibits scraping, you should not scrape it. Where it’s not explicitly forbidden, the legality can be a bit more ambiguous. This isn’t legal advice, and you should consult your own lawyer, but I did some research on the matter as well… Different sources provide different opinions. The best guideline I found, and which is generally applicable, is the principle of “fair use.” “Fair use” is a somewhat tricky legal category to define, but it generally means that if you’re creating new and unique value without harming the original data owner’s interests, web scraping can potentially be legal. Again, this is not legal advice.

(3) APIs

A lot of online applications make some of their data accessible through API connections.

Examples:

- Spotify API: You can get data about songs and artists (e.g., play count, popularity, etc.)

- Coinbase API: You can get cryptocurrency data (e.g., current and historical prices)

- Weather API: You can access weather data (e.g., current and past temperatures, precipitation, etc., based on location)

- …

These API connections provide data directly from the application owners in a structured format. So, it’s guaranteed to be legal, high-quality, and live data.

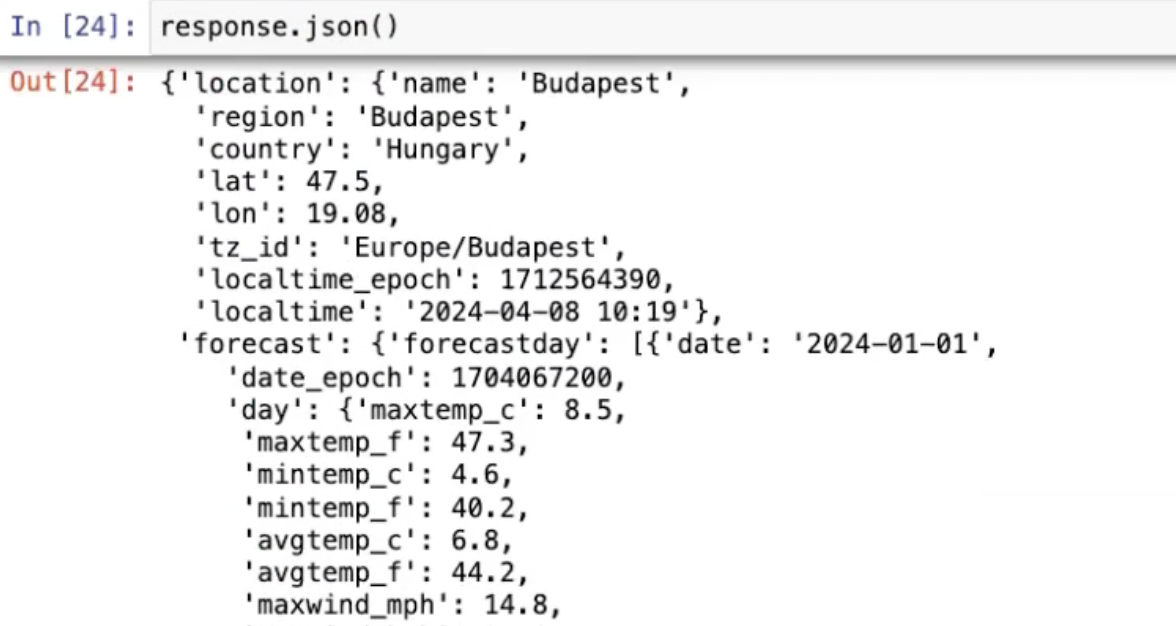

Note: By “structured format,” I mean JSON, which can essentially be converted into a Python dictionary. This might seem intimidating at first, but if you’ve completed something like the Junior Data Scientist Academy, you’ll have no trouble extracting the data you need. Here’s an example of what it might look like:

Drawback: You do need to write Python code for this – though that’s not really a drawback in itself. The real issue is that the documentation for these APIs often has a bit of a “by developers, for developers” vibe… 😅 How can I put this politely? … … Let’s just say user-friendliness isn’t exactly the strength of these guides.

But no worries, I’ve got a demo video for this too, where I walk through the concept using the Coinbase API and the Weather API:

Context: This video was created for the internal competition of the Data Science Club, so you’ll notice a few references to that along the way.

There’s even more…

Collecting data from external sources is an endless topic with endless possibilities. 🙂

In today’s post, I wanted to highlight that there are tons and tons of free datasets available these days, so don’t let a lack of data be the thing that holds your project back!

(Sometime in the future, I plan to write specifically about internal data collection within companies… I’m just not sure how many people would be interested in that topic. If you’re one of them, feel free to drop me an email—I’d appreciate it.)

- If you want to learn more about how to become a data scientist, take my 50-minute video course: How to Become a Data Scientist. (It’s free!)

- Also check out my 6-week online course: The Junior Data Scientist’s First Month video course.

Cheers,

Tomi Mester